I wrote a post a couple of years ago where I looked at how Mormon-related movies were rated on IMDB. I thought it would be fun to do a similar look at how Mormon-related books are rated on GoodReads.

I got ratings for 568 books, mostly Mormon-related, but with a few others for comparison. The Mormon-related ones were scriptures, Church-published materials like manuals, and books by GAs, Mormon studies people, and popular Mormon authors (e.g., Jack Weyland, Anita Stansfield). The non-Mormon ones were a few Bibles, the Left Behind series, the top 10 fiction and non-fiction books on the GoodReads lists (I’m not sure exactly what the criteria for these are), and 20 fiction and 20 non-fiction books that I hoped would be more representative of average books. I picked those from GoodReads user-created lists that had nothing to do with book content (one was strange titles and the other was interesting covers). I required a book to have at least 50 ratings to be included, although I made exceptions for three extra bad ones I was interested in: the ERA-era book Woman, written by a bunch of GAs; the priesthood/temple-ban-justifying Mormonism and the Negro; and the mansplained classic Woman and the Priesthood. Note that I gathered this data in bits and pieces over the last month or so, so some of the numbers might be a little out of date.

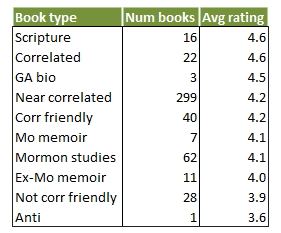

I assigned the books to categories depending on how Correlation-friendly they were. Unfortunately, I’ve only read a small fraction of the books, so I didn’t have firsthand knowledge in most cases. However, most books make it pretty clear what type they are. For example, Mormon studies books are typically published by university presses. More correlated books are typically published by Deseret Book or Shadow Mountain. Anyway, this table shows, for all the Mormon books, the number of books in the sample and their average rating on the 1-to-5 scale used by GoodReads.
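For anyone who wants to build a table like this from their own scrape, here is a minimal Python sketch. It assumes each book is a record with hypothetical fields `title`, `category`, `num_ratings`, and `avg_rating`, applies the 50-rating minimum with a list of named exceptions, and takes the plain (unweighted) mean of the books' GoodReads averages within each category. Whether the table above weights books by their rating counts isn't stated, so the unweighted mean is an assumption.

```python
from collections import defaultdict
from statistics import mean

MIN_RATINGS = 50  # the inclusion threshold described in the post


def category_table(books, exceptions=frozenset()):
    """Return {category: (book_count, mean_of_book_averages)}.

    books: iterable of dicts with hypothetical keys 'title', 'category',
    'num_ratings', and 'avg_rating'. Titles in `exceptions` are kept even
    if they fall below MIN_RATINGS.
    """
    by_cat = defaultdict(list)
    for b in books:
        if b["num_ratings"] >= MIN_RATINGS or b["title"] in exceptions:
            by_cat[b["category"]].append(b["avg_rating"])
    # Unweighted mean of per-book averages (an assumption, see above).
    return {cat: (len(avgs), round(mean(avgs), 2)) for cat, avgs in by_cat.items()}
```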

In my post on movies, I speculated that there would be higher ratings for movies produced by the Church, or that were scripture-adjacent, and that did turn out to be the case. It looks like there’s a similar pattern here, as scriptures rate the highest, and generally categories rank lower in average rating as they move further from being correlated. Here’s a brief explanation of what falls into each category:

- Scriptures — LDS scriptures in different forms (e.g., Book of Mormon separate vs. Triple Combination) plus some non-LDS editions of the Bible.

- Correlated — Church manuals and books that have essentially become manuals (e.g., Jesus the Christ).

- GA biography — Biographies of GAs.

- Near correlated — Nearly all books by GAs, as well as books by people like John Bytheway who are striving to be correlated, and novels by writers with similar goals (Gerald Lund, Chris Heimerdinger).

- Correlation friendly — Books that aren’t quite trying to be correlated, but are still very Church-friendly, like many of the Givens’s books, Hugh Nibley, and some of Patrick Mason’s.

- Mormon memoir — Memoir of someone who’s still Mormon (e.g., Leonard Arrington’s Adventures of a Church Historian).

- Mormon studies — Any look at a Mormon topic from a scholarly perspective, so for example Maxine Hanks’s Women and Authority, or John Turner’s biography of Brigham Young, or Kathleen Flake’s book about the seating of Reed Smoot in the US Senate.

- Ex-Mormon memoir — Memoir of someone who left Mormonism, regardless of hostility level, so everything from Martha Beck’s Leaving the Saints to Katie Langston’s Sealed.

- Not correlation friendly — This includes books with ideas that GAs would generally frown on, even if the writers aren’t hostile to the Church, so for example anything by Carol Lynn Pearson that’s too kind to gay people or too open to Heavenly Mother or rejecting polygamy.

- Anti — The only book I got in this category is Ed Decker’s classic The God Makers.

The table just shows average ratings. I had hoped to look at some interesting patterns of review bombing, as I did in my post on movies (although I didn’t use that term there), where people give the lowest possible rating to a book or movie in an attempt to affect the rating result rather than to express their real opinion of it. Unfortunately, GoodReads’s 1-to-5 rating scale is much shorter than IMDB’s 1-to-10, so evidence of review bombing is much harder to see: there are far fewer values for ratings to be spread across, particularly given that most ratings are in the upper half of the scale. (Both GoodReads and IMDB are now owned by Amazon. Why don’t they get it together and standardize things?)

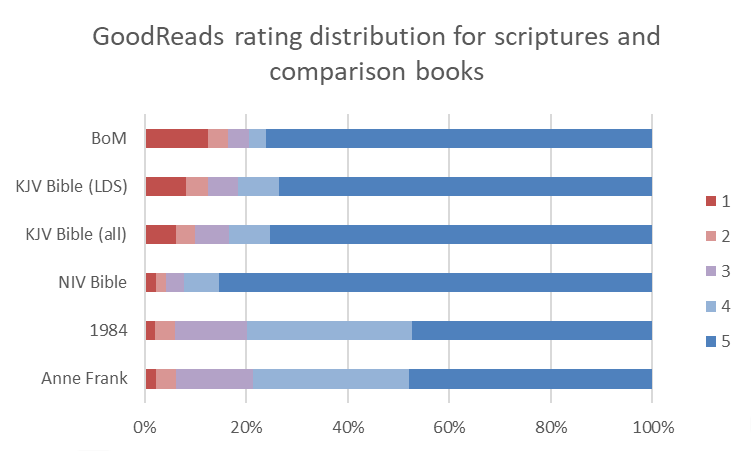

You still can see review bombing in the ratings of some books, though. Here are rating distributions for the Book of Mormon, the LDS edition of the KJV Bible, the KJV Bible as a whole, the NIV Bible, and two non-scripture books for comparison, George Orwell’s 1984 and Anne Frank’s Diary of a Young Girl. (One thing to note about the Bibles is that GoodReads is big on lumping together books that it considers different editions of the same book, and it does this pretty aggressively with the KJV, but less so with the NIV, where I just picked one among many NIV Bibles.)

All the scriptures receive far more 5 ratings than any other value. What’s unusual, though, for the KJV Bibles and especially for the Book of Mormon, is the amount of review bombing. As a quick and easy measure of the level of review bombing, I take the difference between the number of 1’s and the number of 2’s as a percentage of the total number of ratings. For the Book of Mormon, 4% give it a 2 and 12% give it a 1, for a difference of 8 percentage points. The KJV Bible has the same pattern, although it’s not nearly as pronounced, with 4% 2’s and 6% 1’s, for a difference of 2 percentage points. Nineteen Eighty-Four and Diary of a Young Girl show a far more typical pattern, both receiving 4% 2’s and 2% 1’s, so the measure is negative (minus 2 percentage points), suggesting no review bombing. Interestingly, the NIV Bible I included also doesn’t show evidence of review bombing. I didn’t look at a whole lot of Bibles, but among the ones I did look at, the animosity, including the sarcastic reviews about how the Bible is just ridiculous fiction, was mostly concentrated in the KJV reviews.
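The index is simple enough to write down directly. Here is a sketch in Python, assuming you have the count of ratings at each star value (the dict layout is my own choice, not a GoodReads API). The reasoning behind it: for most books, ratings thin out toward the bottom of the scale, so 1's are rarer than 2's, and a positive value flags an unusual pile-up at the minimum.

```python
def review_bomb_index(counts):
    """Percentage of 1-star ratings minus percentage of 2-star ratings.

    counts: dict mapping star value (1..5) to number of ratings.
    Positive values suggest review bombing; zero or negative values
    match the more typical pattern of fewer 1's than 2's.
    """
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no ratings")
    return 100 * (counts.get(1, 0) - counts.get(2, 0)) / total
```

With a made-up distribution that matches the Book of Mormon’s 12% 1’s and 4% 2’s (the 3-to-5-star counts are placeholders), `review_bomb_index({5: 70, 4: 8, 3: 6, 2: 4, 1: 12})` returns `8.0`.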

The review bombing index of 8 percentage points for the Book of Mormon is exceeded by only four books in my sample. Two are among my three exceptions to the 50-rating minimum: Woman (11 percentage points) and Mormonism and the Negro (17). The third is Wendy Nelson’s infamous grace-denying book The Not Even Once Club, which scores 31. The last is an amazingly awful-sounding anti-birth-control book (to be clear, not a Mormon one) that scores a 70! Of course, with numbers as high as these last few, it seems like maybe people genuinely dislike these books and aren’t just review bombing them.

Of course review bombing can go the other way too (review enhancing?), with people giving books the highest possible rating with the intent to influence the rating result. I have no doubt that this happens too, but with books rated so high overall, I can’t think of an easy way to detect it. How do you tell the sincere 5’s from the review-enhanced 5’s? Again, it would be great to have IMDB’s 1-to-10 scale, so the 8’s from people who liked a book could stand apart from the 10’s from people who were maybe review-enhancing.

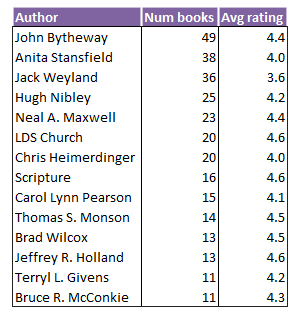

Before I get away from average ratings, I did want to show you the average ratings by author for the people who have 10 or more books in my sample.

I don’t know that there’s much interesting here other than that the fiction writers (Stansfield, Heimerdinger, and especially Weyland) don’t do as well as the non-fiction writers. I think this might be an instance of a more general pattern. It’s just a general sense I have, but I think that the highest-rated non-fiction on GoodReads tends to be rated higher than the highest-rated fiction. I wonder if this is because it’s easier to know what you’re getting into with a non-fiction book than with a fiction book, so people who won’t like a non-fiction book can more easily screen themselves out before reading it, leaving only the more enthusiastic readers to rate such books. I don’t have any data to back this up, though.

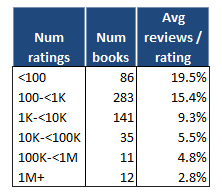

One other issue I was interested in is the percentage of people who rate a book who also write a review. It’s typically just a small fraction. The Book of Mormon has 8% as many reviews as ratings. This is a low value in comparison with books in general, so I at first thought that it was evidence that people were just rating the Book of Mormon a 5 as a kind of low-effort marker of Mormonness. But after looking at a bunch more books, I realized that the more ratings a book gets, the fewer reviews it gets per rating. Here’s a summary table from my sample.

Although the number of books in the table isn’t large, particularly in the lower rows, the consistency of the pattern does give me some confidence that it’s real. For books receiving fewer than 100 ratings, almost 20% of raters also write a review. But at the other end, for books receiving a million or more ratings (in the table, K = 1,000 and M = 1,000,000), fewer than 3% also write a review. I think this makes sense from the perspective of GoodReads users hoping to have their review read. If a book doesn’t have many ratings, you can write a review and actually have some people read it. But if there are already thousands or millions of ratings, with hundreds or thousands of reviews, your review is likely to be buried.
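Here is a minimal Python sketch of how a summary like this could be computed. The post only states the “fewer than 100” and “a million or more” rows, so the power-of-ten bin edges here are an assumption (they are at least consistent with a book of about 83,000 ratings landing in the fourth row). It also assumes the table averages each book’s own reviews-per-rating percentage rather than pooling all books together.

```python
import bisect
from collections import defaultdict
from statistics import mean

# Assumed bin edges (powers of ten); only "<100" and "1M+" come from the post.
EDGES = [100, 1_000, 10_000, 100_000, 1_000_000]
LABELS = ["<100", "100-1K", "1K-10K", "10K-100K", "100K-1M", "1M+"]


def bin_label(num_ratings):
    """Map a rating count to its (assumed) table row."""
    return LABELS[bisect.bisect_right(EDGES, num_ratings)]


def review_rate_by_bin(books):
    """books: iterable of (num_ratings, num_reviews) pairs.

    Returns {row_label: (book_count, mean percent of raters who reviewed)}.
    """
    rates = defaultdict(list)
    for n_ratings, n_reviews in books:
        rates[bin_label(n_ratings)].append(100 * n_reviews / n_ratings)
    return {label: (len(rates[label]), round(mean(rates[label]), 1))
            for label in LABELS if label in rates}
```

For example, a hypothetical book with 83,000 ratings and 6,640 reviews (8%) gives `review_rate_by_bin([(83_000, 6_640)]) == {"10K-100K": (1, 8.0)}`.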

The Book of Mormon has about 83,000 ratings, so it falls into the fourth row in the table. This means its 8% of raters writing a review is actually higher than the average of 5.5%. So good on raters for also reviewing! Or maybe this is just evidence that it’s a book that people have strong opinions about, so they really want to get their opinion in!

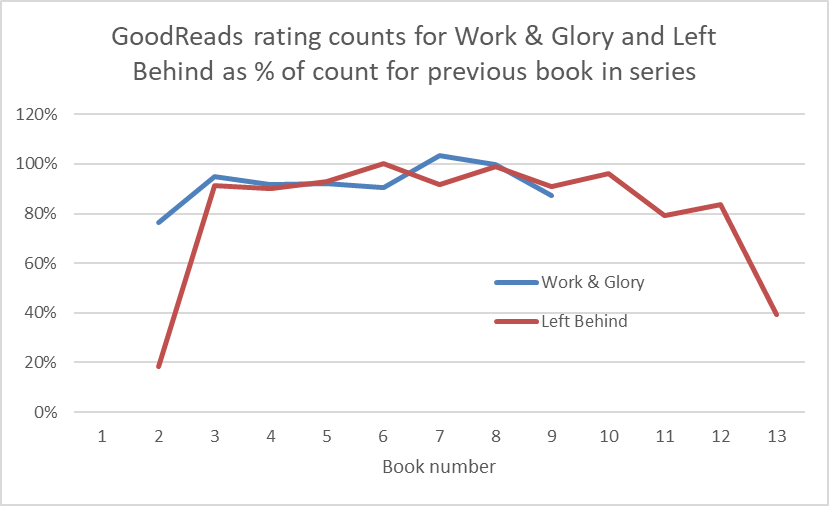

One last thing I looked at, and it’s kind of random, is a comparison of Gerald Lund’s The Work and the Glory series with Tim LaHaye and Jerry B. Jenkins’s Left Behind series. Although one is about the past and the other about the future, I think of them as maybe filling similar roles for Mormons and evangelical Christians in that they’re a series you can read to feel good about how your religion is right. Also, they’re probably connected in my head because I worked in a couple of public libraries, one in Utah and one in another state, in the 1990s and 2000s, and I fielded a lot of patrons’ requests for these books.

I was hoping that the ratings of these books might prove interesting, but they really weren’t. The Work and the Glory books are all rated very close to 4.3 stars (ranging from 4.28 to 4.37), and the Left Behind books are all rated close to 4 (ranging from 3.84 to 4.03). The number of ratings for each book in the series is kind of interesting, though.

Left Behind started out with a bang, as over 220,000 people rated the first book. But then the number of ratings plummeted to just over 40,000 for the second one. After that, though, the number of ratings per book leveled off and declined more slowly for the rest of the series. The Work and the Glory started much lower, at about 16,000, and dropped some, but much less. On the scale of the graph, that drop is hard to see. This next graph fixes that by converting the rating count for each book in each series to a percentage of the rating count for the previous book.

Here it’s clearer that each series suffered its biggest dropoff between the first and second books (over 80% decline for Left Behind, and over 20% for The Work and the Glory). After that, both settled into having only slight dropoffs from book to book. Left Behind has several more books than The Work and the Glory, and it looks like the last book in particular had maybe lost its audience, as the number of ratings fell by over 60%.
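The conversion behind that second graph is just each book’s rating count divided by the previous book’s. A small Python sketch, with placeholder counts rather than the actual series data:

```python
def pct_of_previous(counts):
    """For each book after the first, its rating count as a percentage
    of the previous book's rating count. A value of 20 means an 80% drop."""
    return [100 * cur / prev for prev, cur in zip(counts, counts[1:])]
```

For instance, `pct_of_previous([200, 100, 50])` gives `[50.0, 50.0]`, a 50% dropoff at each step; the first book has no predecessor, so the output is one entry shorter than the input.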

That’s all I’ve got. I had some other ideas of fun things I might poke into, like possible differences in ratings between different translations of the Bible, but they really didn’t pan out with interesting patterns. For example, there are lots of Bible translations appearing in various study Bibles, and it would be hard to know if any differences in ratings were for the translations versus for the supplementary material. Plus most Bibles, like the scriptures in my sample, rate really high, and there’s just not much variation between them to talk about. Anyway, please let me know in the comments if there are other things you think might be fun for me to look into, and if I have the energy, I’ll see if I can get to them for a future post.

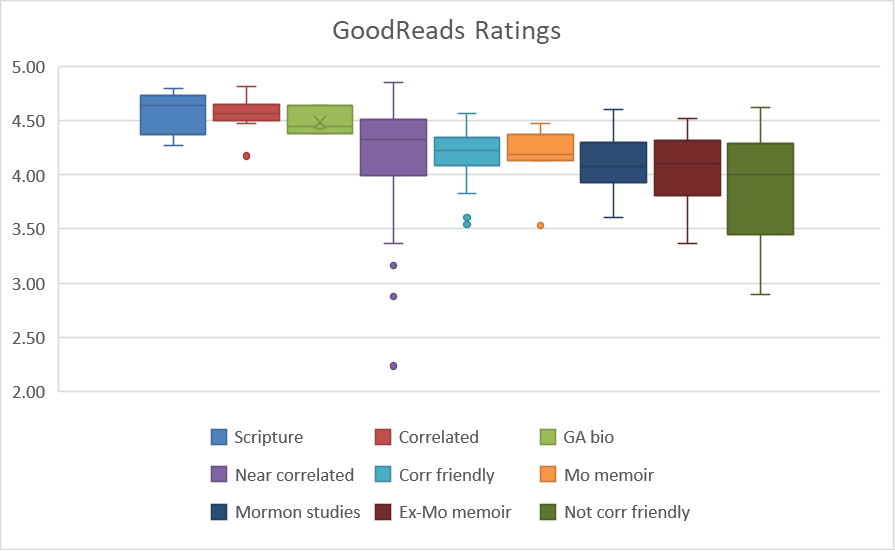

Addendum: DaveW suggested making box plots to show the distribution of ratings for each book type. Here they are. Sorry for the strange labeling. I used Microsoft Excel’s box plot maker, and it resists simply putting the types on the X-axis.
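Excel did the box plots here, but the same summary numbers, plus a standard deviation, can be computed with Python’s standard library. A sketch, assuming a list of hypothetical (category, average rating) pairs. Note that `statistics.quantiles` defaults to its “exclusive” quartile method; I haven’t checked which method Excel’s box plot uses, so the quartiles may differ slightly.

```python
from statistics import quantiles, stdev


def box_summary(ratings):
    """Min, Q1, median, Q3, max, and sample standard deviation."""
    q1, med, q3 = quantiles(ratings, n=4)  # default 'exclusive' method
    return {"min": min(ratings), "q1": q1, "median": med, "q3": q3,
            "max": max(ratings), "stdev": stdev(ratings)}


def summarize_by_category(books):
    """books: iterable of (category, avg_rating) pairs.

    Categories with fewer than two books are skipped, since quartiles
    and a sample standard deviation need at least two values.
    """
    groups = {}
    for cat, rating in books:
        groups.setdefault(cat, []).append(rating)
    return {cat: box_summary(rs) for cat, rs in groups.items() if len(rs) >= 2}
```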

I’m trying to come up with a better way to filter out the review bombs. I have an idea, but it is complicated.

1. Identify a control group of books. Your “strange titles” and “interesting covers” lists are a start, but a larger list would likely be needed to do what I have in mind.

2. Rerate the books in the control group using a variation of the method used for diving scores: ignore all of the 1s and 5s and average only the middle three scores.

3. Run two regressions on the control group, one to estimate the percentage of 1s and another to estimate the percentage of 5s, both using the diving score as the explanatory variable.

4. Impute expected percentages of 1s and 5s to books in the test groups by applying the regression coefficients to the diving scores of those books.

5. Compare those expected percentages to the actual percentages of 1s and 5s.
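The steps above could be sketched roughly as follows. This is a minimal Python reading of them, not code the commenter posted: it assumes each book is a dict of star value to rating count, takes the “diving score” to be the weighted average of the 2-, 3-, and 4-star ratings, and assumes simple one-variable ordinary least squares for the two regressions. A positive excess means more 1s (or 5s) than the control group would predict for a book with that diving score.

```python
from statistics import mean


def diving_score(counts):
    """Average of only the 2-, 3-, and 4-star ratings (1s and 5s ignored)."""
    middle = {s: counts.get(s, 0) for s in (2, 3, 4)}
    n = sum(middle.values())
    if n == 0:
        raise ValueError("no middle ratings")
    return sum(s * c for s, c in middle.items()) / n


def pct(counts, star):
    """Percentage of all ratings that are at the given star value."""
    return 100 * counts.get(star, 0) / sum(counts.values())


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x; returns (a, b).

    Raises ZeroDivisionError if all xs are equal.
    """
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my - b * mx, b


def excess_extremes(control, test):
    """Actual minus expected % of 1s and 5s for each test book.

    control, test: dicts mapping title -> {star: count}.
    """
    xs = [diving_score(c) for c in control.values()]
    a1, b1 = fit_line(xs, [pct(c, 1) for c in control.values()])  # step 3
    a5, b5 = fit_line(xs, [pct(c, 5) for c in control.values()])
    result = {}
    for title, c in test.items():
        d = diving_score(c)
        expected_1, expected_5 = a1 + b1 * d, a5 + b5 * d  # step 4
        result[title] = (pct(c, 1) - expected_1, pct(c, 5) - expected_5)  # step 5
    return result
```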

The regressions may turn out to be too noisy to be useful, but I think it’s worth a shot. If you want to send me your data, I’ll beef up the control group and crunch the numbers myself.

I have a couple of ideas. First, how about a box plot of ratings categorized by book type? Of those 22 books, are they all close to the 4.6 average, or are there a couple of real stinkers in there? It feels like for the books you’ve put in the correlated and near-correlated categories, those books are mostly going to attract folks that might find it difficult to give them bad ratings, even if they didn’t particularly like the book. My expectation is that the correlated and near-correlated groups are more tightly bunched than the memoirs and Mormon studies groups. Even adding a standard deviation to the table might be interesting.

What about splitting up the books by the author’s position in the church? Do Apostles get better reviews than 70s? Do books from members get better reviews than books from non-members? I know it might be difficult to establish the category for all the books, but you could probably do many of them pretty quickly. Some might be hard to place; Brad Wilcox is currently in the YM general presidency, but I still think of him as primarily an EFY speaking circuit guy.

In both cases, maybe the analysis I’m suggesting turns out to be completely uninteresting. Who knows.

Last Lemming, I’ve sent you the data. I’m interested to hear what you find!

DaveW, thanks for the suggestions. I had made box plots by category when writing the post, but they didn’t seem too interesting so I didn’t put them up. I’ve gone back and added them at the end.

I’m just shocked that John Bytheway wrote 49 books. Wow. That is a lot in general, and quite a lot for a non-fiction author.

Oh, sorry, Hokiekate. I should have commented on that. For him, as well as for some GAs, many of their books are just recorded talks (which I think GoodReads then counts as audiobooks). Here’s his list if you want to look:

https://www.goodreads.com/author/list/126807.John_Bytheway

Really interesting post! I’ve been on Goodreads for over a decade. It’s been one of my most satisfying social networking experiences. I gave 3-star ratings to the Book of Mormon as a standalone and to the Triple Combination. I was definitely in ax-grinding mode when I wrote my reviews for those. Also 3-star rating for the LDS edition of the KJV. Here are a couple quotes from that ax-grinding review: 1) Charlton Heston’s Moses is a cool dude. The Bible’s Moses scares the hell out of me (or should I say into me); 2) “Song of Solomon” is a truly beautiful poem. And it talks about boobs a lot. (Later I suggest the Old Testament should be rated NC-17.)

Looks like I lean toward 3 stars for correlated and near-correlated titles. 4 stars for more uncorrelated scholarly works, especially and admittedly if I’m in the choir being preached to. And wow, I gave Truth Restored 2 stars. Take that, Pres. Hinckley! Must have been having a bitter day when I wrote that review. *checks review* Yup, I’m railing against correlation on that one.

On the other side, I gave 5 stars to Adventures of a Church Historian. That was a great read. Generally, for any genre, I give lots of 4s. Like you suggest, we tend to commit to books we have a pretty good chance of enjoying. As a personal rule, again with any genre, I only give 5 stars to books I deeply connected with. I don’t actually give the 5th star as an indication of literary quality. It’s just an expression of having found the book incredibly engrossing and feeling like it speaks to my soul.

Mystifyingly, I gave Fawn Brodie’s No Man Knows My History 3 stars. I must have been trying too hard to appear objective after my ax-grinding reviews of the scriptures. Pretty sure I’d give it 4 in a re-read. And I’ve always encouraged people to read Brodie’s book as a pair with Rough Stone Rolling. Read’em both as a double feature I say! Balance things out.

Thanks, Jake C, for your explanation of how you rate books on GoodReads. It’s really interesting to hear! I admit that I read what other people write on GoodReads, but I’m a total freeloader, and at least as yet, I’ve never even rated a book that I’ve read there, let alone reviewed one.

For Jake C:

https://songmeanings.com/songs/view/3530822107858853042/

(I would not post this here if ZD were not the most humor-friendly blog in what’s left of the Bloggernacle. Ziff, of course, can delete it if it crosses the line.)