A few months ago, The Baron argued in a post at Waters of Mormon that a weakness of the MPAA movie rating scheme is that it considers only the movie’s worst content category (of violence, profanity, and sex). For example, if a movie has enough profanity to get an R rating, the R says nothing about its levels of violence or sex. Such a movie could have any combination of levels of violence and sex, from none at all up to enough to warrant an R rating on their own even without the profanity.

The Baron pointed out that this practice of rating movies by only their worst type of content might set up an odd incentive:

> this only encourages filmmakers to add more “R-rated” content to their movie, since obviously if they know they’re getting an R for violence already, why NOT add a lot of profanity and nudity as well? The rating is going to be the same, either way

This had never occurred to me, but I can see his argument that the rating system would create this incentive. His unstated assumption, though, is that movie makers want to put as much violence, sex, and profanity into their movies as they possibly can. I doubt that that’s actually the case. I suspect they sometimes chafe at the restrictions that aiming for a particular rating places on them, but I would be surprised if getting lots of offensive material in were often one of their major goals.

So which is true? Are movie makers anxious to put lots of offensive content into their movies, or not? What’s fun about this question is that there’s data I can use to try to answer it.

The Baron’s argument leads to the prediction that there aren’t any (or many) “soft” R rated movies. Makers of movies, once they know a movie is going to get an R rating, will typically put extra offensive material in. So there should be relatively few R rated movies that just barely exceed the R threshold, compared to the larger numbers of PG-13 rated movies that fall just below it and “harder” R rated movies that fall well beyond it.

My competing argument that the PG-13 to R threshold has little effect on movie makers leads to the prediction that there will be no dip in the number of movies at the “soft” end of R ratings.

To test these competing hypotheses, I’ll use data from Kids in Mind, a website that rates movies from 0 to 10 in three categories: sex/nudity, violence/gore, and profanity. As of November 21st, they had ratings for 2883 movies, and their ratings, along with MPAA ratings for the same movies, are the data I’ll be using.

Is the number of “soft” R rated movies smaller than you would expect?

Based on the Kids in Mind (hereafter, KiM) data, no, I don’t think so.

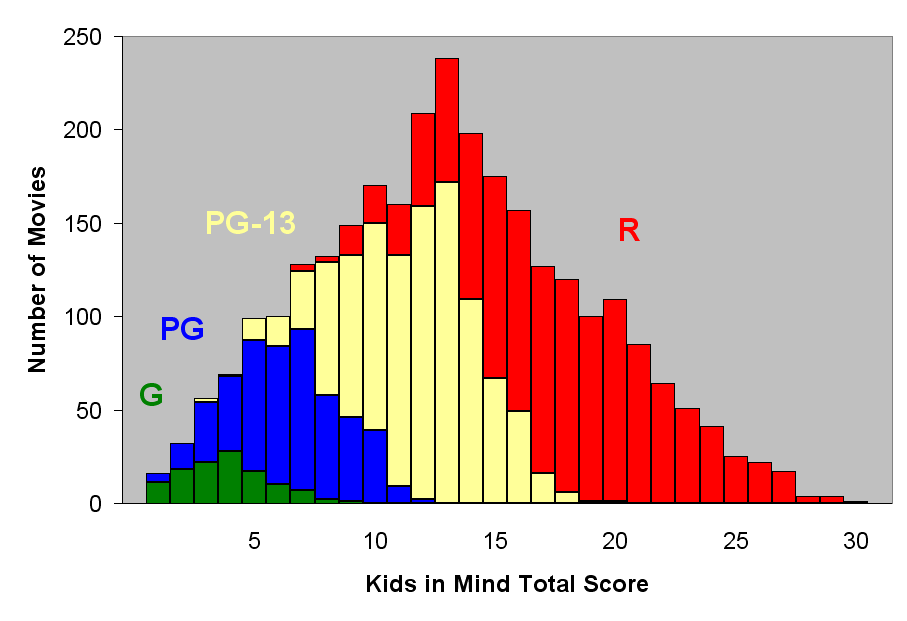

Here’s a figure that tries to answer the question. The scores along the bottom are sums of KiM scores for each movie (sex + violence + profanity), and the heights of the bars represent numbers of movies. The colors of the parts of the bars represent movie ratings: G is green, PG is blue, PG-13 is yellow, and R is red. (3 NC-17 and 22 unrated movies are excluded.)

Answering the question is complicated by the fact that KiM sum scores do not predict MPAA ratings perfectly. But the two are clearly strongly related, so I can at least give it a shot.

Notice as you look left to right in the figure that R rated movies start to appear almost as soon as do PG-13 rated movies. The first real concentration of PG-13 rated movies is at a KiM sum of 5. R rated movies appear only a little later at 7. More generally, the KiM sums at which PG-13 rated movies are most common almost all have a fair number of R rated movies at the same sum. Or consider this: the most common KiM sum for a PG-13 rated movie is 13. But over a quarter of movies having that KiM sum are rated R.
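To make the figure’s bookkeeping concrete, here is a minimal Python sketch of the two tallies used above: grouping movies by KiM sum (one bar per sum) and asking what share of the movies at a given sum are rated R. It assumes each movie is a tuple of (MPAA rating, sex, violence, profanity); the records and the function names are hypothetical stand-ins, not the Kids in Mind data or the code behind this post.

```python
from collections import Counter, defaultdict

# Made-up records for illustration only (NOT actual Kids in Mind data):
# (MPAA rating, KiM sex, KiM violence, KiM profanity)
movies = [
    ("PG", 1, 3, 2),
    ("PG-13", 3, 5, 5),
    ("PG-13", 4, 4, 5),
    ("PG-13", 2, 6, 5),
    ("R", 5, 3, 5),
    ("R", 6, 7, 8),
]


def counts_by_sum(records):
    """Map each KiM sum (sex + violence + profanity) to a Counter of MPAA ratings."""
    table = defaultdict(Counter)
    for rating, sex, violence, profanity in records:
        table[sex + violence + profanity][rating] += 1
    return table


def share_with_rating(table, kim_sum, rating="R"):
    """Fraction of movies at one KiM sum (one bar of the figure) carrying `rating`."""
    bar = table.get(kim_sum, Counter())
    total = sum(bar.values())
    return bar[rating] / total if total else 0.0


def most_common_sum(table, rating):
    """KiM sum at which `rating` appears most often."""
    return max(table, key=lambda s: table[s][rating])


table = counts_by_sum(movies)
print(most_common_sum(table, "PG-13"))     # 13
print(share_with_rating(table, 13, "R"))  # 0.25
```

With these toy records, the most common PG-13 sum is 13, and one of the four movies at that sum is rated R.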

If The Baron’s hypothesis were correct, this figure would show a much greater separation between PG-13 and R rated movies, with the bulk of the R rated movies not appearing until farther to the right, where the number of PG-13 movies is declining. Instead, the whole set of movies forms a pretty smooth distribution, with lots of movies toward the middle KiM sum ratings, fewer toward the extremes, and no really obvious breaks.

Here’s a table that attempts to answer the same question. For each MPAA rating (other than NC-17), I’ve listed the number of movies receiving that rating as well as the minimum, median, and maximum KiM sums for those movies. In the last two columns are the percentages of movies having that MPAA rating whose KiM sums were less than or equal to the median or maximum KiM sum for the next “softer” MPAA rating. Sorry–I know that’s a mouthful. It might be clearer with an example. If you look at the PG line, it says 19 under the column for the median. This means that 19% of PG rated movies had KiM sums lower than or equal to the median (middle) for the G movies. Where it says 89 in the max column, this means that 89% of PG rated movies had KiM sums lower than or equal to the maximum KiM sum for a G rated movie.

| MPAA rating | Number of movies | Min KiM sum | Median KiM sum | Max KiM sum | Pct <= softer median | Pct <= softer max |
|---|---|---|---|---|---|---|
| G | 116 | 1 | 4 | 9 | n/a | n/a |
| PG | 472 | 1 | 7 | 12 | 19 | 89 |
| PG-13 | 1033 | 3 | 12 | 20 | 6 | 59 |
| R | 1237 | 7 | 18 | 30 | 10 | 75 |

Since higher numbers in these last two columns indicate more overlap, it looks like the G and PG rated movies overlapped a lot in their KiM sums. PG-13 and R rated movies also overlapped quite a bit (three quarters of R rated movies had a KiM sum no higher than the highest PG-13 rated movie). But the real break is between PG and PG-13: only a little over half of PG-13 rated movies had a KiM sum less than or equal to the highest KiM sum for PG rated movies, and only 6% were at or below the PG median. This suggests that PG and PG-13 are much more distinct from each other than the other adjacent ratings are. So in spite of its name, the PG-13 rating appears to be an offshoot of the R rating rather than of the PG rating.
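The two overlap columns in the table can be computed as sketched below, assuming you already have each rating’s KiM sums in a list. The numbers are invented for illustration, and `overlap_rows` is a hypothetical helper. Note that Python’s `statistics.median` averages the two middle values when a group has an even count, which may not match how the table’s medians were taken.

```python
from statistics import median

# Made-up KiM sums grouped by MPAA rating (NOT actual Kids in Mind data).
sums_by_rating = {
    "G": [1, 3, 4, 4, 9],
    "PG": [2, 4, 7, 8, 12],
    "PG-13": [5, 9, 12, 13, 20],
}

ORDER = ["G", "PG", "PG-13", "R"]  # softest to hardest


def pct_at_or_below(values, threshold):
    """Percentage of values that are <= threshold."""
    return 100 * sum(v <= threshold for v in values) / len(values)


def overlap_rows(groups):
    """For each rating, % of its movies at or below the next softer rating's median and max."""
    rows = {}
    for softer, harder in zip(ORDER, ORDER[1:]):
        if softer in groups and harder in groups:
            rows[harder] = (
                pct_at_or_below(groups[harder], median(groups[softer])),
                pct_at_or_below(groups[harder], max(groups[softer])),
            )
    return rows


print(overlap_rows(sums_by_rating))
```

Ratings missing from the input (here, R) are simply skipped, so the sketch works on whatever subset of groups you have.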

Now I’ve strayed a bit from the original question, but once I’ve got a data set like this, why not play with it a little and see what other interesting questions I can try to answer? I understand, though, if you don’t want to continue with me.

How are the levels of sex, violence, and profanity in a movie related?

Here are the correlations¹ between the KiM scores for sex, violence, and profanity. I’ve also thrown in MPAA ratings, which I simply coded as G=0, PG=1, PG-13=2, R=3, NC-17=4.

| | Sex | Violence | Profanity | MPAA |
|---|---|---|---|---|
| Sex | 1.000 | | | |
| Violence | 0.253 | 1.000 | | |
| Profanity | 0.544 | 0.404 | 1.000 | |
| MPAA | 0.586 | 0.516 | 0.758 | 1.000 |
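For readers curious where numbers like these come from, here is a minimal sketch of a correlation computed with the same G=0 through NC-17=4 coding. It assumes these are ordinary Pearson correlations (the post does not say which kind), and the scores are made up; only the coding scheme comes from the post. Python 3.10 and later also provide `statistics.correlation`, but the formula is written out here so the steps are visible.

```python
from math import sqrt

# MPAA ratings coded as numbers, as in the post.
MPAA_CODE = {"G": 0, "PG": 1, "PG-13": 2, "R": 3, "NC-17": 4}


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products of deviations, and sums of squared deviations.
    cross = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cross / sqrt(ss_x * ss_y)


# Made-up profanity scores and ratings (NOT actual Kids in Mind data).
profanity = [0, 2, 4, 7]
ratings = ["G", "PG", "PG-13", "R"]
print(round(pearson(profanity, [MPAA_CODE[r] for r in ratings]), 3))  # 0.994
```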

Hmm. So profanity goes with sex and violence more reliably than sex and violence go together. What’s really interesting, I think, is that of the three KiM scores, profanity is by far the most strongly related to MPAA rating. This leads to another interesting question:

Which of sex, violence, and profanity best predicts MPAA rating?

To answer this, I used a linear regression (treating the 0 to 4 MPAA coding as a numeric score), which estimates how well each of the KiM ratings predicts MPAA rating while taking into account that the KiM ratings overlap (that is, they are correlated among themselves). Profanity was the clear winner. For each 1 point increase in profanity, the predicted MPAA rating increased 0.17 points. Each 1 point increase in sex increased the predicted MPAA rating by only 0.09 points, and each 1 point increase in violence also increased it by only 0.09 points.

I know these numbers can seem a little detached from the original question. So let’s consider an example. Say there’s a movie that rates 1-1-1 on the KiM sex, violence, and profanity scales. The regression predicts that the MPAA rating would be 0.982, or a standard PG given the 0 to 4 scale I used.² What if sex increased 5 points to make a 6-1-1 movie? The regression predicts that the MPAA rating would be 1.433, or halfway between a PG and a PG-13.³ But what if instead profanity were increased 5 points to make a 1-1-6 movie? Then the regression predicts that the MPAA rating would be 1.834, solidly in PG-13 territory.⁴ So changing profanity clearly has a larger effect, even if the difference isn’t dramatic.
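The predictions above are just the intercept plus each coefficient times its score. Here is that arithmetic as a small Python sketch using the rounded values from notes 2 through 4 (intercept 0.63, sex 0.09, violence 0.09, profanity 0.17). Because the post’s 0.982, 1.433, and 1.834 come from unrounded estimates, this version reproduces them only to two decimals (0.98, 1.43, 1.83). The function name is hypothetical.

```python
# Rounded coefficients from notes 2-4 (not the unrounded regression estimates).
INTERCEPT = 0.63
COEF = {"sex": 0.09, "violence": 0.09, "profanity": 0.17}


def predicted_mpaa(sex, violence, profanity):
    """Predicted MPAA score on the 0 (G) to 4 (NC-17) scale from KiM scores."""
    return (INTERCEPT
            + COEF["sex"] * sex
            + COEF["violence"] * violence
            + COEF["profanity"] * profanity)


# The three example movies: 1-1-1, 6-1-1, and 1-1-6 (sex-violence-profanity).
for movie in [(1, 1, 1), (6, 1, 1), (1, 1, 6)]:
    print(movie, round(predicted_mpaa(*movie), 2))
```

Raising sex by 5 adds 5 × 0.09 = 0.45, while raising profanity by 5 adds 5 × 0.17 = 0.85, which is the whole difference between the two examples.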

In using this regression, I assumed that transitions between ratings all worked the same. That is, I assumed that the three KiM ratings would predict the difference between G and PG the same way they predicted the difference between PG and PG-13. This assumption could be false. In fact, when I checked it using a different kind of regression model, the test of whether the KiM ratings predicted the same for all the adjacent rating category pairs suggested that this assumption was false. So I looked at the pairs separately.

Which of sex, violence, and profanity best predicts each difference between pairs of adjacent MPAA ratings (e.g., G vs. PG)?

To answer this, I used three logistic regressions: one for the G vs. PG difference, one for the PG vs. PG-13 difference, and one for the PG-13 vs. R difference. (There were so few NC-17s that I didn’t look at the R vs. NC-17 difference.) This table shows some results from these logistic regressions. The values in the table are odds ratios: each one is the factor by which the predicted odds of a movie being in the “harder” category are multiplied for each one point increase in that particular KiM rating.

| KiM category | Odds ratio: PG vs. G | Odds ratio: PG-13 vs. PG | Odds ratio: R vs. PG-13 |
|---|---|---|---|
| Sex | 2.03 | 2.18 | 1.40 |
| Violence | 1.07 | 1.78 | 1.60 |
| Profanity | 3.51 | 2.79 | 2.66 |

Again, I realize this might be a lot to wrap your brain around, so let’s try an example. Among G and PG movies, a movie having KiM ratings of 0-0-1 for sex, violence, and profanity has predicted odds of 1.19:1 of being rated PG.⁵ This translates into a 54% probability: 1.19/2.19 = .54. If sex were increased 1 point, the new odds would just be multiplied by 2.03, yielding 2.42:1, or a 71% probability. Alternatively, if profanity were increased by 1 point, the new odds would be 1.19 × 3.51 = 4.18, or an 81% probability.
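The same odds arithmetic as a Python sketch, using the baseline odds of 0.34 from note 5 and the PG vs. G odds ratios from the table. The conversion from odds to probability is the standard odds / (1 + odds); the function names are hypothetical. Because the inputs are rounded, the profanity-plus-one case comes out as 4.19 here rather than the 4.18 obtained by multiplying the already-rounded 1.19, though the probability still rounds to 81%.

```python
# Rounded values: baseline from note 5, odds ratios from the PG vs. G column.
BASELINE_ODDS = 0.34  # predicted odds of PG (vs. G) for a 0-0-0 movie
ODDS_RATIO = {"sex": 2.03, "violence": 1.07, "profanity": 3.51}


def pg_odds(sex, violence, profanity):
    """Predicted odds of PG vs. G: baseline times each odds ratio raised to its score."""
    return (BASELINE_ODDS
            * ODDS_RATIO["sex"] ** sex
            * ODDS_RATIO["violence"] ** violence
            * ODDS_RATIO["profanity"] ** profanity)


def odds_to_probability(odds):
    """Convert odds of k:1 into a probability k / (k + 1)."""
    return odds / (1 + odds)


# 0-0-1, then one more point of sex (1-0-1), then one more point of profanity (0-0-2).
for movie in [(0, 0, 1), (1, 0, 1), (0, 0, 2)]:
    odds = pg_odds(*movie)
    print(movie, round(odds, 2), round(odds_to_probability(odds), 2))
```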

Consistent with the results of the regression above, profanity is the best predictor for each pair of ratings. Its strength varies, though: it is strongest for the softest pair (PG vs. G) and weakest for the R vs. PG-13 comparison. Violence, on the other hand, is not even a statistically significant predictor of the PG vs. G difference, so there is little evidence that it separates those two ratings at all. (Because odds ratios are multiplicative, an odds ratio near 1, like violence’s 1.07 here, means the predicted odds barely change.) Violence does predict the differences for the “harder” pair comparisons, though. Sex, like profanity, predicts better for the “softer” ratings pairs.

So why is violence such a poor predictor of the G vs. PG difference?

The table below has mean KiM scores in each of the three categories for each MPAA rating. The problem seems to be that violence is fairly similar in G (mean = 2.44) and PG (mean = 2.88) rated movies. Compare this to the much larger increases in the sex and profanity means from G to PG, and in all three means, violence included, from PG onward to increasingly “hard” MPAA ratings.

| MPAA rating | Mean KiM sex | Mean KiM violence | Mean KiM profanity |
|---|---|---|---|
| G | 0.77 | 2.44 | 0.62 |
| PG | 1.69 | 2.88 | 1.89 |
| PG-13 | 3.54 | 4.14 | 4.00 |
| R | 5.02 | 5.95 | 6.80 |
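A table of group means like this one takes only a few lines to compute. The sketch below assumes records shaped as (MPAA rating, sex, violence, profanity) tuples; the values are invented and `kim_means` is a hypothetical helper, not the code used for the table.

```python
from collections import defaultdict
from statistics import mean

# Made-up records (NOT actual Kids in Mind data):
# (MPAA rating, KiM sex, KiM violence, KiM profanity)
movies = [
    ("G", 0, 2, 0),
    ("G", 1, 3, 1),
    ("PG", 2, 3, 2),
    ("PG", 1, 2, 2),
]


def kim_means(records):
    """Mean (sex, violence, profanity) score for each MPAA rating."""
    grouped = defaultdict(list)
    for rating, *scores in records:
        grouped[rating].append(scores)
    # zip(*rows) turns a list of (sex, violence, profanity) rows into three columns.
    return {rating: tuple(mean(col) for col in zip(*rows))
            for rating, rows in grouped.items()}


print(kim_means(movies))
```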

What’s the stereotype about American vs. European movies? Ours have violence and theirs have sex? This result is consistent with that stereotype: even G rated movies aren’t close to having zero violence. This leads me to one last question:

What’s typically the “hardest” content in movies? Is it really violence?

Yes, it is. Or at least the KiM ratings are consistent with this idea. I checked which of sex, violence, and profanity was highest for each of the 2883 movies. Violence was highest or tied for highest for 1508 of them (52%). The number for profanity was somewhat lower (1300, 45%), and for sex it was a lot lower (780, 27%). Because ties count toward every tied category, the percentages add up to more than 100%.
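The highest-or-tied count can be sketched as follows. The post doesn’t say how a movie with all three scores equal (including 0-0-0) was handled; this sketch assumes every tied category gets credit, which is why the totals can exceed the number of movies. The scores and the function name are made up for illustration.

```python
from collections import Counter

CATEGORIES = ("sex", "violence", "profanity")

# Made-up (sex, violence, profanity) scores (NOT actual Kids in Mind data).
scores = [(1, 5, 3), (4, 4, 2), (0, 2, 6), (3, 3, 3)]


def highest_or_tied(records):
    """Count, per category, the movies where it is the highest score or tied for highest."""
    tally = Counter()
    for row in records:
        top = max(row)
        for name, value in zip(CATEGORIES, row):
            if value == top:
                tally[name] += 1
    return tally


tally = highest_or_tied(scores)
for name in CATEGORIES:
    print(name, tally[name], f"{100 * tally[name] / len(scores):.0f}%")
```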

Okay, enough from me. What thoughts do you have about MPAA movie ratings and their relationship with types of movie content? Or please let me know if there are other questions you would like me to try to answer with this fun little data set. (Or point out errors in my analyses if you like.)

———————————–

1. Correlations range from -1 to +1. Positive values indicate that as values of one variable go up, values of the other variable go up too. Larger absolute values indicate stronger relationships.

2. Here’s the calculation: The predicted score for a 0-0-0 movie is 0.63, so the predicted rating is 0.63 + 1 x 0.09 (sex) + 1 x 0.09 (violence) + 1 x 0.17 (profanity) = 0.98.

3. Here’s the calculation: 0.63 + 6 x 0.09 (sex) + 1 x 0.09 (violence) + 1 x 0.17 (profanity) = 1.43.

4. Here’s the calculation: 0.63 + 1 x 0.09 (sex) + 1 x 0.09 (violence) + 6 x 0.17 (profanity) = 1.83.

5. Here’s the calculation: The predicted odds for a 0-0-0 movie of being rated PG are 0.34:1. Odds ratios are multiplicative, so this is multiplied by the odds ratio for each of the KiM scores, each raised to the power of the score (in other words, just repeating the multiplication that number of times). So for a 0-0-1 movie, $0.34 \times 2.03^{0}\ (\text{sex}) \times 1.07^{0}\ (\text{violence}) \times 3.51^{1}\ (\text{profanity}) = 1.19$. (Recall that raising a number to the 0th power yields 1, so the odds ratios for sex and violence have no effect in this calculation.)

Ziff, your analysis here is dizzying. Or, maybe it’s just too early 🙂

I had a question about this sentence.

I think perhaps one of the PG-13 is supposed to be PG, but I’m not sure.

This is a great post, I’m sure film students around the world would love to get their hands on your work here.

Oops! Good catch, Jessawhy! Thanks–I’ve fixed that.

You made a number of false assumptions. First, the number of movies made is not as significant as the cost of making them. Many artsy films cost a million or less, whereas a Wall-E will cost 150+ million. Comparing those two movies as equals is a flaw in your motive dissertation above. Calculate the $$ and there is your motive. Movies that attract 15-21 year olds are where the money is. Star Wars is heavy on the violence and is rated PG and PG-13; an R rating would have killed it before it ever got started. Titanic, a very soft R for a relatively short nude scene (5-6 seconds of 3+ hours), benefited from the rating by bringing in older teens (15 and up) focused on romance. The vast majority of movies that lose money are R rated and do not target the under-21 crowd. They cost next to nothing to make and are not shown nationally or on many screens at any given time. The vast majority of money is spent to see PG-13 movies, and your data does not show that.

The other false assumption you made is that you tried to connect a linear scale to movie ratings. This is certainly not linear or even consistent.

I guess my personal opinion is that the world is not trying to corrupt me and ruin my life. That is out of sync with most Mormons. I seldom even look at the ratings anymore, I try to find out the actual content and judge from there.

Are you saying violence is the “hardest” content because it is most often the highest score of the three?

Good question, Matt. I don’t think so. It looks more to me like violence is the most constant so the MPAA ratings are least sensitive to it.

Jerry, certainly you make a good point that it would be even more interesting to see how much money was spent to make movies of various ratings rather than just a count of them. Do you have a hypothesis about how looking at cost to make movies would change the results of any of the analyses I did?

I agree that the MPAA ratings don’t fall precisely on a linear scale. The figure in the post certainly supports that idea. There is lots of overlap, particularly between G and PG, and between PG-13 and R. But the ratings certainly approximate a linear scale. Look back at the last table. Each increasing step in the MPAA ratings is associated with an increase in average sex, violence, and profanity by the KiM scale.

This is a brilliant analysis of movie ratings. I wish I had your statistical skills, but I also thought it might be important to point out some more things to consider. First, this entire analysis relies on the objectivity of Kids in Mind raters, who still must assign scores based on somewhat subjective criteria. Also, your methodology doesn’t consider the possibility of change over time, which might be important to consider if you want to talk generally about the nature of MPAA ratings.

This hypothesis doesn’t make a lot of sense to me. Filmmakers make movies for a lot of reasons: to express themselves artistically, to try to make money, etc. Are there filmmakers who try to “get away with” as much profanity, nudity, and violence as they can? Sure, but I don’t think this reflects how all filmmakers work. A really interesting movie about ratings is the documentary “This Film Is Not Yet Rated.” This film is about the line between R and NC-17 movies. It mostly tells the story of filmmakers who hope to get an R rating for their movie and get an NC-17 rating instead (just as a warning, this film shows a lot of clips from R and NC-17 movies to illustrate differences in ratings). While this documentary only shows one side of the story (the filmmakers’), it does give you a better idea of how the film industry works. So much is about marketing. Filmmakers create a film that they hope to market to a certain audience, so they hope that it will get a certain rating. Of course they are disappointed when it doesn’t get that rating, because that causes all kinds of marketing problems. One strength of this documentary is that it shows a wide range of filmmakers, from John Waters (who I would argue wants to get away with as much as possible) to the director of “Boys Don’t Cry,” who seems really invested in her message. The special features include an interview with a filmmaker of a movie about a young black teenage girl who wants to play basketball (I can’t for the life of me remember the title). She really wanted to make this movie for young teenagers, as it explores hard issues about teenage sexuality, and was disappointed when it received an R rating.

As I have gotten older I have moved away from looking so much at ratings, and I have come to rethink how I classify films. Instead of thinking of movies on a scale from good (no sex/violence/language) to bad (a lot of sex/violence/language), I now think of movies on a scale from good (well written, saying something profound about human nature, inspiring, something that causes me to think) to bad (fluffy, mindless, pure entertainment with little substance).

> Titanic a very soft R for a relatively short nude scene (5-6 seconds of 3+ hours) benefited from the rating by bringing in older teens (15 and up) focused on romance.

Titanic was rated PG-13.

> The special features include an interview with a filmmaker of a movie about a young black teenage girl who wants to play basketball (I can’t for the life of me remember the title). She really wanted to make this movie for young teenagers as it explores hard issues about teenage sexuality and was disappointed when it received an R rating.

Sounds like Love and Basketball.

Justin, yes I think that is what the movie was. Good job!

Thanks, Joel. I agree that the value of these analyses rests on how valid and reliable the KiM raters are. I guess I figured the MPAA raters are clearly unreliable at times, so what would it hurt to compare their ratings to another set of potentially unreliable criteria? 🙂 No, seriously, it would of course be better if the KiM raters’ reliability could be assessed.

You make a good point about possible changes over time. I actually looked at whether the relationship between KiM and MPAA ratings changed over time in some of the regression models. In other words, I used them to ask the question of whether sex, violence, and profanity predict MPAA ratings the same way across time. It seems like a frequently heard criticism of MPAA ratings is that they’re shifting to assigning “softer” ratings to the same movies as time passes, so today’s PG-13 would be yesterday’s R.

There was some suggestion of changes over time, but the effects were inconsistent and very small in magnitude compared to the effects I did report, so I left them out for simplicity. KiM only started rating movies in the early 1990s, though, so that might not have been enough time for a change over time effect to show up.

Beatrice, thanks for the recommendation of This Film Is Not Yet Rated. It sounds interesting, particularly given that it agrees with my suspicion that movie makers are motivated by more than simply cramming in as much offensive material as they can.

One more point about the time issue, Joel. KiM actually acknowledges that their rating system has not been completely consistent over time, and that while they try to go back and re-do ratings to bring them up to the current standard, they’re not always up to date. (See the last question on this page.)

We’ve also seen This Film Is Not Yet Rated and really liked it (but yeah, it’s not for the squeamish). I’m also leery about assigning motive to filmmakers, because each one has a variety of reasons for putting the stuff into the movie that they do. I’m taking a class on film from Spain right now and we’ve watched some pretty interesting stuff–most of it would probably be at least R in America. I also generally use ratings as only one measure of what is in a movie, combined with sites like KiM, to decide if I’m going to watch it. The profanity issue really bothers me, because more than a few F-words means an automatic R rating. For foreign movies, that means that nearly all of them end up being R no matter what else the content. I can think of at least a dozen movies from other countries that have an R rating just for profanity in the subtitles, and yet there is little other offensive content in there. The decision to translate certain words as the f-word is an interesting one, and often seems arbitrary to me. When I watch Spanish movies without subtitles I actually enjoy it more because I hardly notice the swears; since I’m a non-native speaker, they don’t have the same impact on me. I guess it also bothers me because I know a lot of people who miss out on good films because they don’t want to see an F-word in a subtitle. I believe the documentary talked about movies that are rated R simply because the director wanted them to not be for kids, and specifically added content to get them up to that rating. There are many, many movies and many reasons for having those things in there. You could also have a post about non-rated R movies that definitely aren’t for kids. Personally I’m appalled by people who automatically show their kids anything that has a G-rating; some of the messages in there aren’t for children. But I’m also really conservative with what I let my children watch.
Of course, I’m also probably a hypocrite because I watch stuff that’s very “hard” and I don’t let them watch The Little Mermaid because of the theme of the movie.

Thanks for your comment, FoxyJ. That’s a great point about the foreign-to-U.S. translation so often resulting in R ratings. I wonder if that isn’t part of the reason many foreign movies are just left unrated when introduced into the U.S. market.

It’s a good reminder too to consider the question of how we use ratings when thinking about what to show our kids. (I guess that site is called Kids in Mind, after all–you would think I would have noticed that. 🙂 ) My wife and I are pretty laissez-faire when it comes to regulating what our kids watch, but we do discuss bad stuff with them during and after at least some of the time. And it’s surprising sometimes what bugs them. A few days ago, we all watched A Christmas Story while putting up Christmas decorations, and my older son (who is eight) left the room for the scene when Flick gets his tongue stuck to the metal pole.

Two other interesting things about the documentary.

1-Different cultures have different standards about movies. For example, they talked about how sex is viewed as being a lot worse than violence in the U.S., but how it is the opposite in Europe.

2-They also talked about how the raters view different portrayals of sex. For example, sexual positions other than the missionary position contribute to a higher rating. Also, there were some interesting comments about the sexuality of women. Sex scenes that focus more on the female perspective are generally rated higher than sex scenes from the male perspective. Before I watched this movie I hadn’t realized that the majority of sex scenes that we see on TV and in the movies follow the same cookie-cutter scenario. I was really surprised at how different the sex scenes from the female perspective were, because I never imagined that these scenes could be shot any way other than the standard. The creators of the documentary and many of the filmmakers commented about how as a society we are less comfortable with the sexuality of women than the sexuality of men.

As Jerry mentions, money influences the rating before a movie is made; money also influences the rating after the movie is made. The major Hollywood studios can and do bully the MPAA into getting the coveted PG-13 rating for their films–this is especially likely with big budget blockbuster movies. Independent films lack the clout to do this; producers of some lower budget films may not care. Movies such as “The Dark Knight” and “The Return of the King” easily could have been rated R, and there definitely is more objectionable material in those films than in a number of R rated movies I have seen. The real Hollywood model isn’t to put as much offensive material as possible in an R movie, it is to put as much offensive material as possible in a PG-13 movie.

Good analysis–it makes a difference when someone actually has a background in statistics!

I didn’t have any direct statistical evidence when writing the original post (and it looks like there isn’t any) that filmmakers actually *were* adding in extra gratuitous content to already-rated-R movies, only that the system was designed so they *could* without penalty. The incentive structure of the ratings is designed such that there would be nothing to stop or discourage them from doing so.

(As noted, many filmmakers still choose movie content based on its artistic merits, not just ‘what can we get away with?’ However, let’s not overstate the ‘art’ component in movies–a great many movies are made simply to appeal to certain demographics and make money. Action movies frequently include nudity not just because they can get away with it within the same rating, but because young guys who primarily like action movies tend to like seeing naked women on the screen. It’s not rocket science…)

Two comments:

One, the numbers seem to show that the greatest link between two of the PSV categories is sex and profanity (they are the two more likely to be together in a movie, if I’m understanding it correctly).

However, there’s a caveat here: in Kids-in-Mind’s analysis at least, sexual dialogue and innuendo is classified as “profanity” in most cases, rather than “Sex” (which is strictly nudity and sexual acts). Thus what gets counted in the Profanity score for a movie is oftentimes really just another component of the “Sex” content from how we as viewers would probably classify it.

That distinction undoubtedly has a large effect on the high correlation score between sex and profanity, and unfortunately without a means to break out just regular swear words in a movie’s profanity score, it may not be possible to really check whether sex and profanity are really the “bestest friends” as the raw numbers indicate.

Two: the fundamental problem with doing a statistical analysis at all on ratings is that we don’t have a good basis for comparing different categories. Is a “6” in Violence really comparable to a “6” in Sex or Profanity? The only thing we know about a “6” in Violence is that it was judged to be slightly more violent than other movies with a “5” and slightly less violent than a “7”.

The fact that there are more G-rated movies with “high” violent content than G-rated movies with comparable sex/profanity content doesn’t necessarily indicate that the US is more ‘tolerant’ of violence, since we have no means of comparing what the equivalence is between the categories.

What’s considered to be G-rated ‘violence’ (cars crashing, things blowing up, scary monsters, even) doesn’t really have a sexual equivalent, hence perhaps the reason ratings are stricter with sexual content, even though on the arbitrary ratings/judgment scale they are given the same numerical value in the end…

Ziff, this is fun stuff.

I think there are other explanations for what’s going here, however. You claim that “The Baron’s argument leads to the prediction that there aren’t any (or many) “soft” R rated movies.”

If the argument is that ALL R-rated movies have extra hard material put in because they are already R, then I think your statistics do indeed disprove the point. However, I don’t think he’s making that broad a claim. It’s pretty obviously not true all of the time, or even most of the time, for that matter.

I do think Kevin’s point is a good one, because I believe it’s not infrequently true. It’s particularly true in movies that are marketed towards the college-male demographic. Just as a frequent movie watcher, I have noticed that there’s a big gap between the R movies aimed at young males and the PG-13 movies aimed at young males.

This fact doesn’t preclude the existence of soft R’s in other genres. And it’s hard to say that the chart reveals anything at all on this, because there’s no way to know what it would look like if this factor weren’t in play. It’s possible that the red bars in the chart would have had a much sharper decline and that this phenomenon is causing an unnaturally bloated right side (note that the bar under 20 is a little higher than the one before it.)

I do think your graphs and level of nudity would be altered by taking money into account. Very few high budget movies have any nudity. I think you would see a much higher leaning towards violence which tends to have a lot more money behind it.

Hello, Baron, thanks for stopping by and for asking a question interesting enough to get me to write a post. (That’s really saying something since I’m typically such a lazy blogger!) You make an excellent point about how sexual dialog gets coded by the KiM raters affecting the profanity-sex correlation. I hadn’t even looked at the KiM data that closely to see that.

Great point too about the difficulty in comparing the different KiM scales to each other. I definitely agree that, as it stands, the points on the scales aren’t likely to be equivalent. I do think, however, that since the distinction between “some” and “none” is a more reliable one than 4 vs. 5 or 2 vs. 3, that the difference in profanity, sex, and violence levels in G movies likely really is meaningful. That is, since the profanity and sex means are so near zero and the violence mean is some distance from zero, I think that it is possible to say that G rated movies have more violence than profanity or sex.

Thanks, Eric, I’m glad you enjoyed it. Sorry if I sounded like I was arguing that the Baron’s point was that all R rated movies will be well beyond the threshold. I was trying to look at the weaker version of the hypothesis, that some R rated movies that would otherwise be just beyond the threshold get pushed up to being well beyond the threshold. Certainly you’re right that my (semi) analysis doesn’t refute the Baron’s hypothesis, but I do think the smoothness of the distribution is more consistent with the hypothesis that this isn’t happening.

But I like your idea of looking at specific genres of movies. Perhaps noticeable gaps would show up in the distribution for movies targeting young men, as you suggest. Or perhaps there’s a gap in the overall distribution and it’s just not a big one. I guess it all boils down to how many movies we expect to be affected in this way.

Of course, truth be told, I’m not all that committed to my version of the hypothesis. As you can probably guess, I was more trying to use it as a jumping off point to fiddle around a bunch with some potentially interesting data.

“this only encourages filmmakers to add more “R-rated” content to their movie, since obviously if they know they’re getting an R for violence already, why NOT add a lot of profanity and nudity as well? The rating is going to be the same, either way”

I work in film and TV in Los Angeles and I think the above idea is flawed because of when films are rated in the process of finishing and distributing a film. The MPAA rating often comes too late in the process to have a significant effect on editorial decisions. Three thoughts:

1) During the editorial process, the executive producers and the studio do indeed have an eye on what rating they are trying to achieve; depending on the film and the contracts, the rating will be more or less important. But during shooting and post-production they are making an educated guess as to what the final rating will be. They don’t actually know until the film gets its rating, which can happen a significant time after the film is completed. Going back into a show to make changes after the rating is established can be costly and can throw off the release schedule. So if studios are responding to the MPAA, they are doing so based on their perception of what the rating will be, not on the actual rating itself.

2) Sometimes filmmakers do reopen a film in response to an MPAA rating, but again it’s a troublesome process: it costs additional money and time, which no one has late in the process. The stories one hears about this happening usually involve a film that has been bumped up to a stricter rating category than the studio expected, say, from R to NC-17. That move represents a large cut in potential audience, so the offending scenes are re-cut.

3) In the world of TV the pressure is always in one direction: when in doubt, standards and practices folks tend to be very conservative, often gutting content to make it clean enough. Since TV has less of an air of creative autonomy, S&P is more active than one might think in determining content. Of course, in TV the MPAA plays no role, so it’s about audience expectations, the networks’ internal standards (yes, they do have them), and the FCC.

Can I pause to praise the 7-point Kids-in-Mind score, which is evidently compatible with any rating from G to R?

Of course, there’s a big difference between a 2-3-2 movie and a 0-7-0 movie in terms of content, so the fact that a lot of different ratings add up to the same number isn’t very revealing…

As always, Ziff, I greatly enjoyed the statistical analysis. Thanks!

In case anyone’s curious, here are the 7 point total KiM score movies rated G (with sex, violence, and profanity scores):

Babe, Pig in the City (1998) – 1-5-1

Cars (2006) – 2-3-2

Chicken Little (2005) – 1-4-2

Ice Princess (2005) – 3-3-1

102 Dalmatians (2000) – 1-4-2

The Princess Diaries (2001) – 3-3-1

The Santa Clause 2 (2002) – 2-3-2

And the ones rated R:

Besieged (1999) – 5-2-0

Beyond Rangoon (1995) – 0-5-2

Beyond Silence (1996) – 4-2-1

Mr. Saturday Night (1992) – 0-1-6

Consistent with your prediction, Baron (#22), the R rated movies have higher maximums on average.
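For anyone who wants to check the arithmetic, here is a minimal sketch that transcribes the eleven movies listed above, assuming the scores are in (sex, violence, profanity) order as stated. It confirms every movie totals 7 and averages each movie’s worst (highest) category score by rating. The dictionary and function names are just illustrative.

```python
# KiM scores transcribed from the lists above, in (sex, violence, profanity) order.
G_RATED = {
    "Babe, Pig in the City": (1, 5, 1),
    "Cars": (2, 3, 2),
    "Chicken Little": (1, 4, 2),
    "Ice Princess": (3, 3, 1),
    "102 Dalmatians": (1, 4, 2),
    "The Princess Diaries": (3, 3, 1),
    "The Santa Clause 2": (2, 3, 2),
}
R_RATED = {
    "Besieged": (5, 2, 0),
    "Beyond Rangoon": (0, 5, 2),
    "Beyond Silence": (4, 2, 1),
    "Mr. Saturday Night": (0, 1, 6),
}


def mean_max(movies):
    """Average, across movies, of each movie's worst (highest) category score."""
    maxima = [max(scores) for scores in movies.values()]
    return sum(maxima) / len(maxima)


# Sanity check: every movie in both lists has a KiM total of 7.
assert all(sum(s) == 7 for s in {**G_RATED, **R_RATED}.values())

print(f"G mean max: {mean_max(G_RATED):.2f}")  # 25/7, about 3.57
print(f"R mean max: {mean_max(R_RATED):.2f}")  # 20/4 = 5.00
```

With only seven G and four R movies, this is a description of these particular lists, not a test of the Baron’s hypothesis.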

Thanks for your comments, Douglas. It’s great to hear the perspective of an insider.

Regarding your point #3, has the TV pressure changed over time? Steven Johnson, in Everything Bad Is Good for You, argued that while TV used to always lean toward the least offensive content, the rise of cable has allowed for much more interesting and challenging shows. I would be interested to hear whether this has been true in your experience.

Just to consider:

I always figured the establishment of the PG-13 rating was to allow producers to put in content that they previously couldn’t without getting an R rating – that PG-13 movies often function now as “soft R movies” based on former standards. I am old enough to realize that MANY of the PG-13 movies my children see now would have been rated R when I was a teenager.

IOW, that category allows producers of movies to market, unofficially, content to the 10-16-year-old demographic that previously would only have been kosher to market to the 17-and-up demographic, and not even to all of the 17-18-year-olds still living at home with conservative parents.

I also could have added TV-14 (the PG-13-equivalent rating between TV-PG and TV-M) as the chance to market television shows to teenagers that previously would have been limited to adults.

Ray, my understanding was that, at least at the time, the PG-13 rating was cooked up because of concern over what kinds of stuff was being put in movies getting PG ratings. I thought Indiana Jones and the Temple of Doom, which got a PG rating, was a crucial case showing the need for finer ratings distinctions between PG and R.

I don’t doubt that you’re right that the ultimate effect of the creation of PG-13 has been the wider availability of some content that would have been considered R rated before. But at least at the time, I think that PG-13 was seen as making a new place for the “harder” PGs rather than the “softer” Rs.

But I’m certainly not an expert in the history of movie ratings; I could have this wrong. What would be really interesting is if the KiM raters would go back and rate movies from before the (1984-ish?) introduction of the PG-13 rating so we could compare PGs and Rs from before with PGs, PG-13s, and Rs since.

Ziff #24, thinking in averages here isn’t quite the right move, though. The argument is about the amazing degree of overlap among ratings, not about the mean separation. So while mean tendencies tell us that there is some information in the ratings, specific exemplars tell us that there’s clearly something other than content going into the ratings, possibly including aesthetics, genre, or studio politics.

For instance, from your list, Babe, Pig in the City has the same maximum as Besieged and Beyond Rangoon, and a higher maximum than Beyond Silence. Chicken Little and 102 Dalmatians have the same maximum as Beyond Silence. These are certainly anomalies, but they’re interesting because they suggest that a film can possibly earn a lower rating than it arguably deserves simply by (a) packaging itself as a kids’ film and (b) being distributed by Disney….
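The overlap described here can be counted directly. The sketch below pairs every G movie with every R movie from the lists above (scores assumed to be in (sex, violence, profanity) order) and keeps the pairs where the G movie’s worst category is at least as high as the R movie’s. The function name is hypothetical.

```python
from itertools import product

# KiM scores transcribed from the lists above, in (sex, violence, profanity) order.
G_RATED = {
    "Babe, Pig in the City": (1, 5, 1),
    "Cars": (2, 3, 2),
    "Chicken Little": (1, 4, 2),
    "Ice Princess": (3, 3, 1),
    "102 Dalmatians": (1, 4, 2),
    "The Princess Diaries": (3, 3, 1),
    "The Santa Clause 2": (2, 3, 2),
}
R_RATED = {
    "Besieged": (5, 2, 0),
    "Beyond Rangoon": (0, 5, 2),
    "Beyond Silence": (4, 2, 1),
    "Mr. Saturday Night": (0, 1, 6),
}


def overlapping_pairs(g_movies, r_movies):
    """(G title, R title) pairs where the G movie's maximum score >= the R movie's maximum."""
    return [
        (g_title, r_title)
        for (g_title, g_scores), (r_title, r_scores) in product(
            g_movies.items(), r_movies.items()
        )
        if max(g_scores) >= max(r_scores)
    ]


pairs = overlapping_pairs(G_RATED, R_RATED)
for g_title, r_title in pairs:
    print(f"{g_title} (G) max >= {r_title} (R) max")
print(len(pairs), "of", len(G_RATED) * len(R_RATED), "pairs")  # 5 of 28 pairs
```

The five pairs it finds are exactly the ones named in the comment: Babe against Besieged, Beyond Rangoon, and Beyond Silence, plus Chicken Little and 102 Dalmatians against Beyond Silence.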

If you look at movies from before the advent of the PG-13 rating, it seems to me like the PG-13 was meant to be a place for harder PGs rather than softer Rs. Three PG movies from that era that come immediately to mind as having no business being PG are The Graduate, The Outlaw Josey Wales, and Kramer vs. Kramer, all of which are very adult thematically and feature at least PG-13 levels of nudity.

RT, I guess I was thinking of the Baron’s original point that it’s the highest score–the worst category–that drives the rating more than the total. But of course as you point out, there are lots of exceptions that work in predictable ways.

Ziff #25,

It’s difficult for me to know the answer to that question. I haven’t been in TV for a great length of time; I’m a post super, and I’ve delivered only about 90 episodes of network and cable shows. I don’t know what it was like 10 or more years ago.

What I will say is that the networks respond to what they see and hear in the cuts we deliver to them. It’s not like decisions regarding how raunchy or daring content will be are directly made at the network level for all shows before they go into production. The networks just want to please their audiences.

So it’s complicated. Some shows challenge FCC and internal standards in significant ways and others don’t; in both cases there are many conversations and a lot of back and forth. In all cases, network S&P folks are always thinking about who might be offended, who might get mad, what the implications of a certain joke or bit of content are, etc.

Some cable channels do like to push the limits, but if anything is going to have a shelf life in syndication, then clean versions of the show are created. This is true even of shows that have reputations for hard language and violence. Sometimes the studio will create a syndication master as part of posting the series. Sometimes they will do a re-pack after the fact.

Regardless, those of us who work in post are dealing with these issues on a daily basis.

How disturbing that after months of spiritual pondering like Enos alone in the wilderness I return to “the world” only to find Brother Ziff spending far too much time discussing banal immoral things. Think of the time and energy that you could have put into something productive, like identifying which of us in the church were what rank of general in the war in heaven, or determining the route Moroni took between Cerro Vigia and Cumorah. Sometimes I really wonder what this world is coming to.

Brother Knudsen III, it’s so good to see you around these parts again!

I’m rather surprised that you would consider these immoral things “banal.” In fact, I think they’re rather exciting immoral things. In fact, I suggest that using the KiM ratings, we can find out how exciting a movie is by multiplying its three individual scores. So a movie scoring 10-10-10 would score a 1000, the maximum possible, on the excitement scale. Isn’t that a little interesting?
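In the same tongue-in-cheek spirit, here is a minimal sketch of the proposed “excitement scale,” assuming each KiM category runs from 0 to 10 (which is what makes 10-10-10 = 1000 the maximum). The function name is made up for illustration.

```python
from math import prod


def excitement(sex, violence, profanity):
    """Tongue-in-cheek 'excitement' score: the product of the three KiM scores (0-10 each)."""
    for score in (sex, violence, profanity):
        if not 0 <= score <= 10:
            raise ValueError("KiM category scores run from 0 to 10")
    return prod((sex, violence, profanity))


print(excitement(10, 10, 10))  # 1000, the maximum
print(excitement(1, 5, 1))     # 5, e.g. Babe, Pig in the City
print(excitement(0, 1, 6))     # 0, e.g. Mr. Saturday Night
```

One quirk of multiplying rather than adding: a zero in any single category zeroes out the whole score, so a movie with lots of profanity but no sex or violence counts as not exciting at all.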

In any case, I hope you stick around to find more things to be appalled at, even if I don’t get around to figuring out premortal ranks for everyone. 🙂

Brother Knudsen, I’m afraid you couldn’t be more right–Brother Ziff is in fact obsessed with all things worldly. In fact, if you look back through his posts, you’ll discover that this isn’t the first time he’s casually used such worldly terms as “regression,” and devoted his time to analyzing sinful media. In fact, he’s gone so far as to apply his statistics to the Great and Spacious Building itself–the bloggernacle! All we can do at this point, I’m afraid, is to pray for his soul.

You are right, Brother Ziff — thank you for catching my spelling error — it happens from time to time due to the cumbersomeness of translating higher thoughts to, you know, regular people speech and what not. But I meant “anal” — my mistake.

Lynnette, I would pray for his soul, and even submit his name to the temple, but I’m afraid my year’s supply of little pencils has run dry. After this past election I didn’t think we would still be here this long.